Eighteen years ago, I wrote my first computer program.

Ever since, writing the code has been the hard part.

Today, it isn’t.

Over the last ~18 months, AI crossed a quiet but important threshold in software development. Not because it became perfect, but because it became good enough often enough to move the center of the job.

The technology has evolved from autocomplete on steroids into agents that can plan, execute, and iterate on development tasks with surprising autonomy.

I’ve found myself swinging between two extremes: either “AI will replace developers altogether” or “AI is overhyped and produces fragile code”. Neither view survives contact with real-world usage.

What follows are not predictions. Just some of my thoughts and observations from a year of shipping code with AI.

Climbing the abstraction ladder (again)

At its core, software development has always been about expressing intent. What keeps changing is how much of the “how” we must spell out.

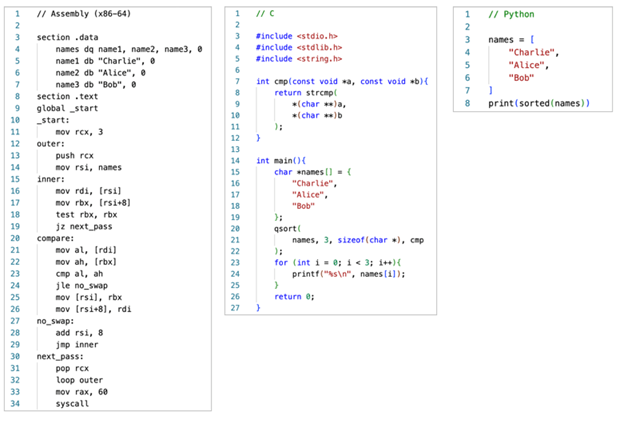

A small but familiar task (sorting a list of names alphabetically) shows this shift clearly:

Forty years ago, assembly language required 34 lines of highly technical, fragile instructions that managed registers and memory addresses directly.

Then C moved us away from registers, but we still had to manage memory pointers and write explicit imperative logic.

Then Python (and similar high-level languages) pushed abstraction further. Code became more declarative, and the same task could be expressed in fewer lines.

Now, with LLMs, a single natural-language instruction is enough to generate the code, translating intent directly into something executable.
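To make the middle rungs of the ladder concrete, here is a minimal Python sketch that sorts the same list of names twice. It is not the code from the figure above. The first version uses an explicit, C-style insertion sort with hand-managed indices. The second simply states the intent. The function names and sample names are illustrative assumptions.

```python
def sort_names_imperative(names):
    """Insertion sort with explicit index bookkeeping, roughly as you would write it in C."""
    result = list(names)  # copy so the caller's list is left untouched
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Shift larger names one slot to the right until `current` fits.
        while j >= 0 and result[j] > current:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result


def sort_names_declarative(names):
    """State the intent and let the runtime handle the mechanics."""
    return sorted(names)


names = ["Maria", "Andrei", "Ioana", "Bogdan"]
print(sort_names_imperative(names))   # ['Andrei', 'Bogdan', 'Ioana', 'Maria']
print(sort_names_declarative(names))  # ['Andrei', 'Bogdan', 'Ioana', 'Maria']
```

Both functions return the same list. What differs is how much of the “how” the programmer writes down. Even the one-liner carries decisions, though. Python compares strings by code point, so `"alice"` sorts after `"Bob"`. A natural-language prompt hides such details entirely, which is why they still need a human to pin them down.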

None of these steps removed engineering.

It concentrated it.

When machines do more of the typing, humans must do more of the thinking:

- defining constraints

- anticipating edge cases

- validating outcomes

Writing fewer lines of code doesn’t reduce judgment.

It raises the stakes of each decision.

From pottery to 3D printing

Beyond writing fewer lines, something more fundamental is shifting. Code itself is becoming less personal.

Historically, engineers were deeply connected to their code.

Style mattered. Elegance mattered.

People would rewrite working code because it didn’t match their preferences. Code was a kind of signature, a badge of craft and experience.

I still remember spending five minutes hunting for the “right” variable name. Not because I was slow, but because compressing meaning into something readable felt important. It felt like a craft.

Today, that level of attachment feels like a luxury. In those same five minutes, I can generate hundreds of lines of code, explore multiple designs, and run test cases to confirm the behavior.

The analogy that keeps coming back to me is pottery versus 3D printing.

If you’ve ever watched a potter work, you know what I mean. The craft is intensely personal. The time to finish a cup depends on the clay, the artist’s mood, and countless subtle adjustments.

3D printing, by contrast, is standardized, repeatable, engineered.

Less romantic. Less “handmade”.

And yet, the process isn’t less creative overall. It simply reorganizes where creativity lives.

A typical 3D printing workflow has three steps:

- Design the 3D model

- Let the machine print the object

- Validate and refine

That maps almost perfectly onto AI-driven software development:

- Define specs and constraints (human ingenuity & intent)

- Generate the implementation (AI automation)

- Review, test, and refine (human judgment)
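One way to make this loop concrete is to write the spec as executable checks before asking a model for any code. The sketch below is a hypothetical illustration, not a description of a specific tool or workflow. It assumes a made-up spec for sorting names (case-insensitive order, input left unmodified, equal names kept in input order). It also assumes a hypothetical `generated_sort_names` function that stands in for whatever the model produced.

```python
from typing import Callable, List

SortFn = Callable[[List[str]], List[str]]


# Step 1 (human intent): the constraints, written down as executable checks.
def spec_violations(sort_fn: SortFn) -> List[str]:
    """Return human-readable spec violations; an empty list means the spec passes."""
    problems = []

    names = ["bob", "Alice", "Carol", "alice"]
    original = list(names)
    result = sort_fn(names)

    if names != original:
        problems.append("input list was modified")
    if sorted(result) != sorted(original):
        problems.append("output is not a permutation of the input")
    if [n.casefold() for n in result] != sorted(n.casefold() for n in original):
        problems.append("output is not in case-insensitive order")
    # "Alice" comes before "alice" in the input, so it must stay first.
    if result.index("Alice") > result.index("alice"):
        problems.append("equal names did not keep their input order")
    if sort_fn([]) != []:
        problems.append("empty input not handled")

    return problems


# Step 2 (AI automation): hypothetical stand-in for model-generated code.
def generated_sort_names(names: List[str]) -> List[str]:
    # sorted() is stable, so names equal under casefold keep their input order.
    return sorted(names, key=str.casefold)


# Step 3 (human judgment): check the outcome before accepting the code.
print(spec_violations(generated_sort_names))  # []
print(spec_violations(sorted))                # ['output is not in case-insensitive order']
```

The plain `sorted` call fails because uppercase letters sort before lowercase ones by code point. That is exactly the kind of edge case the spec exists to catch. The code is generated, but deciding what “correct” means stays with the human.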

This is what I mean by moving from handcrafted to plan-crafted software.

The craft didn’t die.

It moved upstream.

I’ll be honest: from a certain perspective, this is a sad moment for the true “pottery artists”, the passionate coders who take pride in elegant, hand-shaped code. If you asked a master potter to hang up their apron and start operating a 3D printer, they would revolt. They would say the output is generic and the process is soulless.

I know that feeling because I felt it myself.

The gut punch

I vividly remember a moment in November 2024 when, for the first time, I saw a computer generate code that was better than what I would have written.

Not “good enough”. Not “decent”. Better.

The reaction was visceral.

Excitement.

Fear.

And, unexpectedly, loss.

It felt as if something valuable were being taken away from me. I had spent years honing the skill of writing code, learning to debug obscure errors, and memorizing libraries. Suddenly, that “badge of expertise” felt fragile.

Some of my best memories of building software are about being “in the zone”, balancing mental models while typing, compiling, running, and seeing that moment of pure satisfaction: YES! It works.

At first, AI made it harder to reach that state. It felt like cheating. Like skipping the struggle. I kept asking myself: If I’m not writing the code, am I still an engineer?

Eventually, I stopped resisting. I began treating AI not as a replacement, but as a force multiplier. And slowly, I discovered a new kind of flow.

Instead of chasing the right set of instructions, I was chasing better ways to describe what I wanted and to validate the result.

I put down the clay and the apron, and I started tinkering with the 3D printing machine. And to my surprise, it was fun.

After a year of shipping production systems this way, I feel a renewed passion for building software. I am no longer shaping the clay by hand. I am focused entirely on the shape of the vessel.

The future is already here

In March 2025, Anthropic’s CEO, Dario Amodei, claimed:

“In three to six months, AI will be writing 90% of the code. In 12 months, it may be writing essentially all of it.”

At the time, I was skeptical. It sounded like classic AI CEO hype.

But sitting here in January 2026, with the latest generation of models, I’m starting to think he was right. For many of my use cases, it’s already true. AI is generating 90% of the code I commit.

The productivity gains have been real, though they vary by task.

For smaller, well-defined work such as building generic features, writing scripts, or automating repetitive logic, we’ve seen up to 10X improvements.

For larger, more complex projects, the gains we achieved were closer to 2X. That may not sound dramatic, but doubling delivery speed meaningfully changes what teams can attempt and how often they can iterate.

One concrete example: a solution involving backend services, a frontend, and non-trivial business logic was estimated at five to six months using traditional methods. By leaning into an AI-driven workflow, we shipped a production-ready version in two and a half months.

Pragmatism still matters.

There are still plenty of cases where relying entirely on AI is a mistake. In massive legacy codebases with poor documentation, or in contexts that require extreme novelty, the models still hallucinate.

But the gap is closing. Fast.

When AI fails, it’s usually not because it can’t reason. It’s because I gave it an unclear intent, insufficient context, or weak constraints.

And here’s the thing: the tools we have today are likely the worst versions we’ll ever use.

As they improve, what will matter most is precision of intent, rigor in validation, and the ability to orchestrate AI toward outcomes that matter.

If you’re a “pottery artist” developer, I understand the sadness.

I felt it too.

But we cannot let that nostalgia stop us from picking up the new tools.

The clay is drying.

The printers are warming up.

It’s time to learn how to operate the machine.

Paul Cirstean, Head of Innovation @Yonder

Banner by Alex Jones on Unsplash
