Many companies still run software built on programming languages that few people use, know, or learn anymore. When you can no longer hire people who understand your codebase, product continuity becomes a risk. Every change becomes slower, more expensive, and more fragile.

At some point, the question became inevitable: Can AI radically accelerate legacy migration without compromising quality and stability?

Paul, Software Developer and Tech Lead at Yonder, decided to run an experiment to test whether AI could meaningfully support legacy migration, beyond small productivity gains. We had a large internal project built on an old technology stack, which made it a safe environment for experimentation, yet one with real constraints. The benchmark was clear: in a previous project, migrating a similar module the traditional way took a few months.

“AI-driven migration is new territory, and the only way forward is to experiment and see what works. There are very few moments like this in a career, when a real shift is happening. I genuinely believe we might not be writing code in a few years, and that’s exactly why I wanted to run this experiment and convince myself first” – says Paul.

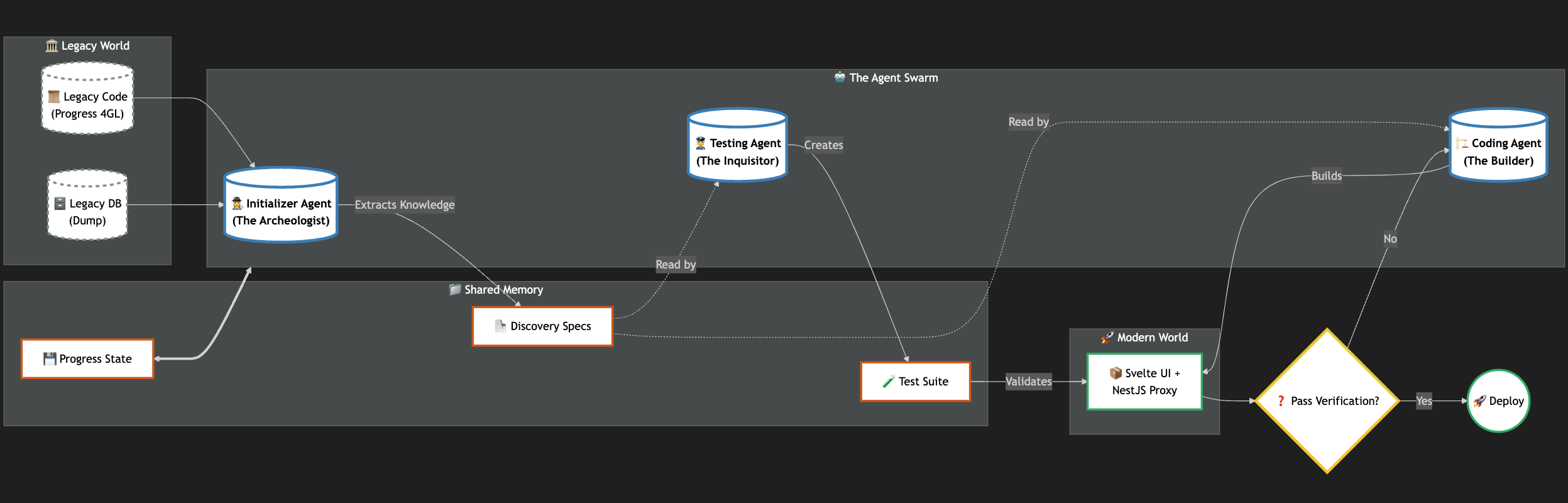

Tech stack and approach

- Legacy language: Progress (OpenEdge ABL)

- Frontend: Svelte

- Backend: Node.js

- AI tooling: Claude Code (Anthropic)

What made the difference was how the work was split. First, agents received chunks they could solve independently, so their context never had to be compressed. Second, each agent had a specialized role.

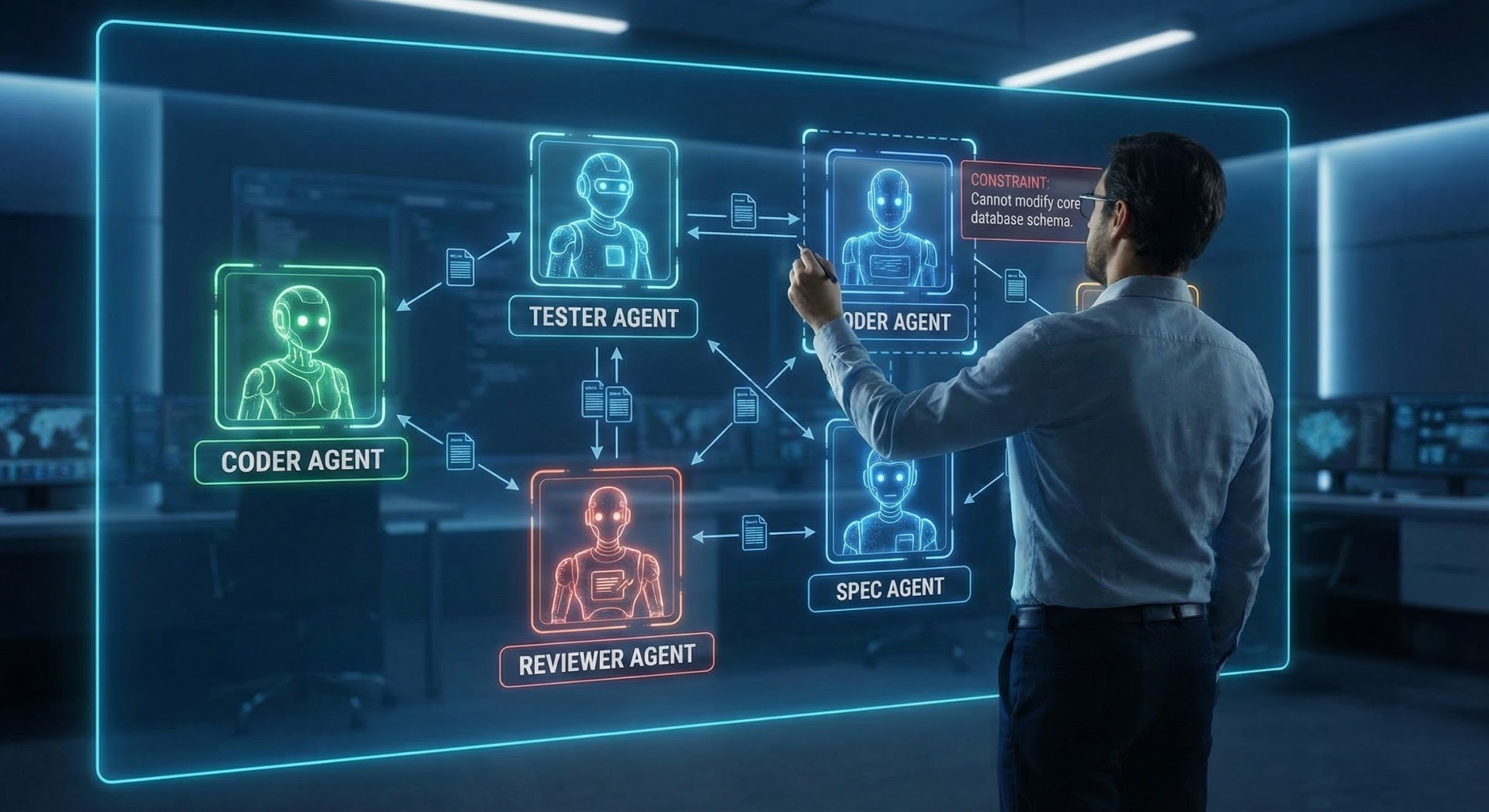

“AI agents have memory limits. When conversations get too long, information gets compressed, details are lost, and mistakes or hallucinations start to appear. Instead of fighting this limitation, I created an agent harness: an orchestrated system of specialized agents – one writing code, one writing specifications, one focused on testing, and one handling code review. Each agent received small, well-defined pieces of the system and only the context it truly needed. This intentional fragmentation required time and discipline upfront, but it laid the foundation for reliability and autonomy later on” – Paul explained.
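As a rough illustration of this kind of harness, the sketch below builds a separate, size-bounded input for each of the four roles instead of sharing one long conversation. Everything in it is an assumption for illustration: the `Task` shape, the `ROLE_INSTRUCTIONS` prompts, the `maxChars` budget and the function names are hypothetical, and no model is actually called.

```typescript
// The four specialized roles described in the quote above.
type AgentRole = "spec" | "code" | "test" | "review";

// One small, well-defined slice of the migration (hypothetical shape).
interface Task {
  id: string;
  description: string;
  files: string[]; // only the files this slice touches
}

interface AgentInput {
  role: AgentRole;
  taskId: string;
  prompt: string;
  context: string[]; // file excerpts handed to this agent, nothing else
}

// Hypothetical per-role instructions; real prompts would be far more detailed.
const ROLE_INSTRUCTIONS: Record<AgentRole, string> = {
  spec: "Describe the legacy behavior of these files as a testable specification.",
  code: "Implement the specification in the target stack. Do not touch other files.",
  test: "Write tests that prove the implementation meets the specification.",
  review: "Review the change against the specification and report any gaps.",
};

// Build a fresh, bounded context for one agent instead of reusing a long conversation.
function buildAgentInput(
  role: AgentRole,
  task: Task,
  repo: Map<string, string>,
  maxChars: number,
): AgentInput {
  const context: string[] = [];
  let used = 0;
  for (const path of task.files) {
    const content = repo.get(path);
    if (content === undefined) continue; // skip files that do not exist
    const entry = `=== ${path} ===\n${content}`;
    if (used + entry.length > maxChars) break; // stop before exceeding the budget
    context.push(entry);
    used += entry.length;
  }
  return {
    role,
    taskId: task.id,
    prompt: `${ROLE_INSTRUCTIONS[role]}\n\nTask: ${task.description}`,
    context,
  };
}

// Plan one isolated input per role, in a fixed order.
function planPipeline(task: Task, repo: Map<string, string>, maxChars: number): AgentInput[] {
  const order: AgentRole[] = ["spec", "code", "test", "review"];
  return order.map((role) => buildAgentInput(role, task, repo, maxChars));
}
```

In a fuller harness, the output of the specification agent would be added to the context of the coding, testing and review agents; that hand-off is left out to keep the sketch short. The design choice it illustrates is that each agent starts from a fresh, small context rather than inheriting everything said before.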

Implementation and challenges

Early on, AI tended to declare victory too soon.

“It would say it had finished, but it hadn’t. That meant a lot of intervention at first, which was time-consuming” – added our colleague.

This is where feedback loops became essential. Instead of correcting results by hand, the system was redesigned so that agents validated their own outputs: failed checks triggered retries or refinements, and constraints, rather than human micromanagement, guided the corrections.
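A feedback loop of this kind can be sketched as follows. The `Agent` and `Check` types, the `maxAttempts` default and the idea of checks returning a message are hypothetical; a real check might compile the code or run the test suite. The loop is kept synchronous for brevity, whereas a real model call would be awaited.

```typescript
// A check inspects an agent's output and returns null on success or a failure message.
type Check = (output: string) => string | null;

// Hypothetical agent signature: receives the task plus feedback from failed checks.
type Agent = (task: string, feedback: string[]) => string;

interface LoopResult {
  output: string;
  attempts: number;
  passed: boolean;
  failures: string[]; // failures from the last attempt (empty when passed)
}

function runWithFeedback(agent: Agent, task: string, checks: Check[], maxAttempts = 3): LoopResult {
  let feedback: string[] = [];
  let output = "";
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    output = agent(task, feedback);
    // Run every check so the agent sees all failures at once, not just the first.
    const failures = checks
      .map((check) => check(output))
      .filter((f): f is string => f !== null);
    if (failures.length === 0) {
      return { output, attempts: attempt, passed: true, failures: [] };
    }
    feedback = failures; // failed checks become the next attempt's feedback
  }
  // Retries exhausted: this is where a human would step in.
  return { output, attempts: maxAttempts, passed: false, failures: feedback };
}
```

For example, an agent that reports success while leaving a `TODO` marker behind fails a "no TODO markers" check on its first attempt, receives the failure message as feedback, and gets another chance. Only after `maxAttempts` failed attempts is the result returned with `passed: false`.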

Very quickly, the process shifted toward spec-driven development:

- Heavy investment in clear specifications

- Explicit constraints: what the AI can and cannot change

- Breaking large systems into small, solvable problems

- Clear success criteria for every task
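One way to make constraints and success criteria explicit is to write each task as a small data structure and check the agent's changes against it. The sketch below assumes constraints are expressed as path prefixes; the `TaskSpec` fields, the example paths and criteria in `exampleSpec`, and the `findViolations` helper are all hypothetical.

```typescript
interface TaskSpec {
  id: string;
  goal: string;
  allowedPaths: string[]; // path prefixes the agent may modify
  forbiddenPaths: string[]; // prefixes that must never change, even inside allowed ones
  successCriteria: string[]; // human-readable; each should map to an automated check
}

// Hypothetical spec for one small migration task.
const exampleSpec: TaskSpec = {
  id: "orders-list",
  goal: "Port the order list screen to Svelte and Node.js",
  allowedPaths: ["frontend/src/routes/orders/", "backend/src/orders/"],
  forbiddenPaths: ["backend/src/orders/legacy-adapter/"],
  successCriteria: [
    "All tests under backend/src/orders/ pass",
    "The endpoint returns the same fields as the legacy screen",
  ],
};

// Return one message per changed file that breaks the spec's constraints.
function findViolations(spec: TaskSpec, changedFiles: string[]): string[] {
  const violations: string[] = [];
  for (const file of changedFiles) {
    if (spec.forbiddenPaths.some((p) => file.startsWith(p))) {
      violations.push(`${file}: forbidden path`);
    } else if (!spec.allowedPaths.some((p) => file.startsWith(p))) {
      violations.push(`${file}: outside allowed paths`);
    }
  }
  return violations;
}
```

A non-empty result from `findViolations` can be treated like any other failed check: the change is rejected and the messages are handed back to the agent.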

The real WOW: long-running agents and autonomy

With better specifications and feedback loops in place, something fundamental changed: the process of migration no longer needed constant human supervision. AI agents were able to:

- Work autonomously for 5–8 hours at a time

- Pick up tasks directly from GitHub issues

- Write, test, and refine code without human input
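Picking up work from GitHub issues can be as simple as filtering the repository's open issues. The sketch assumes the issues were already fetched from GitHub's REST endpoint `GET /repos/{owner}/{repo}/issues` and that issues meant for agents carry a label; the `agent-ready` label, the `AgentTask` shape and `selectAgentTasks` are hypothetical. Because that endpoint also returns pull requests (they carry a `pull_request` field), the filter drops them.

```typescript
// Minimal subset of the fields GitHub's REST API returns for an issue.
interface GitHubIssue {
  number: number;
  title: string;
  body: string | null;
  state: string;
  labels: { name: string }[];
  pull_request?: unknown; // present when the item is actually a pull request
}

interface AgentTask {
  issueNumber: number;
  title: string;
  spec: string; // the issue body serves as the task specification
}

// Hypothetical label marking issues agents may pick up on their own.
const AGENT_LABEL = "agent-ready";

function selectAgentTasks(issues: GitHubIssue[]): AgentTask[] {
  return issues
    .filter((i) => i.state === "open" && i.pull_request === undefined)
    .filter((i) => i.labels.some((l) => l.name === AGENT_LABEL))
    .sort((a, b) => a.number - b.number) // lowest issue number first
    .map((i) => ({ issueNumber: i.number, title: i.title, spec: i.body ?? "" }));
}
```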

“At some point, I realized I could just go to sleep, and the application would keep migrating. In later runs, entire parts of the application were migrated end-to-end without any human intervention. One of those runs lasted over eight hours, fully autonomous.”

Results that matter

- Time to delivery: from months to less than one week

- User experience: modern frontend, updated backend, simpler maintenance

- Business impact: a concrete answer for clients struggling with legacy systems

Lessons learned (and what scales)

“The biggest learnings were surprisingly simple: AI performs best on small, well-defined problems, and clear limits drastically reduce hallucinations. At the beginning, I intervened constantly, not because AI was bad, but because I didn’t yet know how to set the right boundaries” – Paul concludes.

Thank you, Paul, for taking the time to explore this and share the journey with us.

What we’re seeing is a real shift in the role of humans: from writing code to designing how AI thinks, what it’s allowed to do, and how outcomes are validated. Work moves from execution to architecture and judgment, and once you see that shift in action, it’s hard to unsee it.

By Paul Petri

Sr Software Developer & Tech Lead
