We all know the benefits of test automation, but can test automation really advance your testing mission? Do all test automation projects succeed in adding the desired value? The reality is that some test automation efforts, unfortunately, are not successful; they fail soon after they have been initiated because of different pitfalls.

I have faced various challenges along my testing journey and experienced both successes and failures. The failures taught me the hard way how to avoid extensive trial and error and how to proceed when embarking upon the promise of test automation.

Here are 13 “lucky” lessons that I’ve learned during my years in testing.

1. Know your automation business case

Know the value your test automation brings.

Context: I found myself, in multiple cases, in the position of having to convince others about the need for test automation: testers, developers, managers, product owners, clients. Let’s go through the most important criteria that you would need to consider:

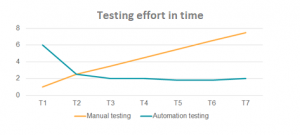

Based on my experience, the initial investment can be a real obstacle at the beginning because of the higher upfront costs and the uncertainty. In the long term, however, automation can save real time and effort. With manual testing, the effort grows as new functionality is added, and additional testing effort is needed sprint after sprint. With automation, the investment is higher at the beginning, but the cost is stable and lower over time, which reduces the additional effort (Return on investment).
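The return-on-investment argument above can be made concrete with a small calculation. The sketch below is only an illustration: the function names and every number in it are hypothetical, and it assumes manual effort grows by a fixed amount each sprint while automation has a one-off setup cost plus a flat maintenance cost per sprint. Real projects will rarely be this linear.

```python
def cumulative_costs(sprints, manual_first, manual_growth, auto_initial, auto_per_sprint):
    """Return two lists with the cumulative effort (in hours) after each sprint.

    Assumed model (hypothetical, for illustration only):
    - manual regression costs `manual_first` hours in sprint 1 and grows by
      `manual_growth` hours every sprint as new functionality is added;
    - automation costs `auto_initial` hours up front, then a flat
      `auto_per_sprint` hours of maintenance per sprint.
    """
    manual, automated = [], []
    manual_total = 0
    auto_total = auto_initial
    for sprint in range(sprints):
        manual_total += manual_first + manual_growth * sprint
        auto_total += auto_per_sprint
        manual.append(manual_total)
        automated.append(auto_total)
    return manual, automated


def break_even_sprint(manual, automated):
    """Return the first sprint (1-based) where automation is no more expensive
    than manual testing, or None if that never happens in the given range."""
    for sprint, (manual_cost, auto_cost) in enumerate(zip(manual, automated), start=1):
        if auto_cost <= manual_cost:
            return sprint
    return None


if __name__ == "__main__":
    # Made-up figures: 20h of manual regression growing by 4h per sprint,
    # versus 200h of automation setup plus 10h of maintenance per sprint.
    manual, automated = cumulative_costs(12, 20, 4, 200, 10)
    print("Break-even sprint:", break_even_sprint(manual, automated))
```

Plugging in your own estimates, even rough ones, gives stakeholders a number to discuss instead of a general promise that automation pays off eventually.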

In general, automated execution is more reliable and much quicker for repetitive, standardized tests that cannot be skipped, because it avoids the human error that creeps in when such tests are run manually (Reliability). It is also straightforward to reuse tests for other use cases or to execute the same tests on different browsers (Reusability).

The execution time (nightly or on every new build) is much shorter, and the team receives feedback very quickly, which enables early defect detection (Reduced testing time). You can write new automated tests and add them to the automation suite, so more features are properly tested at each execution. This frees up time for writing new tests or for finding complex scenarios through exploratory testing (Higher test coverage).

When I had to convince someone about the need for test automation, I learned that it is essential to know the business case, the benefits of test automation, and the added value, and, most importantly, to map them onto the product context.

2. Focus on business needs

Don’t think just about test automation but think about the added value

Context: When we say ‘automation,’ a lot of people talk about new magic tools that appear overnight, the personal need or pleasure to write automated tests, new test design techniques, but … are all of these going to bring any value to the product? If we have a small project (3-5 months), an unstable product that changes continuously, or a product that is very difficult to automate, is our automation going to bring any value? Is it better to invest a lot of effort in detailed UI test automation, or should we also plan for a more time-efficient approach for back-end testing?

I’ve learned that the tool is not important in test automation. It’s there just to help us put our approach in place. The most important part is how we construct our testing approach to establish our testing mission to bring more value to the delivered outcome. I’ve learned that it’s ok, in specific project contexts, to say no to automation if it does not bring any value to the product. It is ok then to focus on what will bring value and on the insights that enable us to make better decisions and better products.

3. Adjust to the context

“The value of any practice depends on its context” (Context-driven testing)

Context: The test automation process was working successfully for project A. The team was happy; the client was happy; we had high test coverage, passing tests, and a stable product. What a great idea to set the same process, framework, technology, and automation in place for project B, so we can achieve the same results! An easy thing to do. And easy to implement as well. But the results were quite the opposite. The team was not happy, as the different techniques and technologies in project B added overhead. The client wasn’t happy, as they had a different perception and the results they expected were unclear. The tests we wrote were very slow because of the project's complexity and instability, and they failed because of quick changes and a lack of time for test maintenance.

I’ve learned that I need to take into consideration specific information from the context, such as the product's business scope, the architecture and technology, the culture, the practices, and the team. I need to adapt the best practices to fit the context, not force the context to fit the best practices.

Every project is different, and to gain value and results, we need to focus on its context and clarify our automation mission and way of working based on it: “The value of any practice depends on its context.”

4. Build your own Test Strategy

“Plan your work for today and every day, then work your plan” (Margaret Thatcher)

Context: I joined a larger project, made up of 6 Scrum teams, as a test automation lead. I noticed that every team had its own way of approaching automation: some wrote automated tests in each sprint, some had a coverage of 5%, others 20%, and some had not written any at all. There were no coding standards, no practices, no CI, no maintenance of the tons of failing tests, no integration testing. The application was very unstable and entered a 4-month stabilization period. The price was paid.

When multiple teams work on the same product, or even just one team, everyone must be on the same page. We need to work together to define a test strategy that addresses the product mission, explains how possible risks will be mitigated, and keeps everyone following the same goal. An Automation Test Strategy helps by describing the testing approach across the software development cycle. It covers the operations, practices, and activities that will take place, how the possible risks will be mitigated, and the actions planned as a result (test mission, risk mitigation, approach, test coverage, test types, tools, team collaboration, environment, and so on). This way, testers have a well-defined common goal to collaborate and work on, and developers and product owners have transparency on what works and what does not.

I’ve learned that to succeed, we need a clear test strategy that sets out how we are going to approach things, how we will achieve our goals, and how we will deliver quality releases by sharing a common vision.

5. When things are unclear, start with a Proof Of Concept

Prove them right or … prove them wrong

Context: I started test automation on a product written in Angular, so I decided to use the tool that the community promoted as the best for that. After some days of working on the framework and test design, I discovered that the tool did not support “drag and drop” on the main browser, and drag and drop was one of the main functionalities of the app. Everything that I had written was useless, and I needed to start over.

Therefore, it’s important to start the automation process with a tool adoption step: a 1-2 day proof of concept (POC) of the proposed technical solution to validate the recommended tools or libraries. Start by defining the characteristics and needs of the automation tool (cross-platform support, supported development language, features, paid or open-source, …). Then, with 2-3 recommended tools, create the POC focusing on 3 types of scenarios: simple/most used, medium, and complex, covering most or all of the application's particularities. Finish by choosing the tool that best meets your needs.
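One way to turn the POC outcome described above into a comparable result is a small weighted scoring sheet. The sketch below is an assumption about how that could look, not a prescribed method: the tool names, criteria, weights, and scores are all hypothetical, and a tool with any recorded blocker (such as a missing feature the application depends on) is excluded before scoring.

```python
from dataclasses import dataclass, field


@dataclass
class PocResult:
    """Outcome of trying one candidate tool in the POC (all values hypothetical)."""
    tool: str
    scores: dict                      # criterion name -> score from 0 (bad) to 5 (great)
    blockers: list = field(default_factory=list)  # must-have needs the tool failed


def rank_tools(results, weights):
    """Drop tools with blockers, then rank the rest by weighted score (highest first)."""
    ranked = []
    for result in results:
        if result.blockers:
            continue  # a failed must-have outweighs any score
        total = sum(weight * result.scores.get(criterion, 0)
                    for criterion, weight in weights.items())
        ranked.append((result.tool, total))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Complex scenarios weigh the most because they expose application particularities.
    weights = {"simple": 1, "medium": 2, "complex": 3, "cross_platform": 2}
    results = [
        PocResult("tool_a", {"simple": 5, "medium": 5, "complex": 2, "cross_platform": 4},
                  blockers=["no drag and drop on the main browser"]),
        PocResult("tool_b", {"simple": 4, "medium": 4, "complex": 4, "cross_platform": 3}),
        PocResult("tool_c", {"simple": 5, "medium": 3, "complex": 2, "cross_platform": 5}),
    ]
    for tool, total in rank_tools(results, weights):
        print(tool, total)
```

Treating blockers separately from scores is a deliberate choice here: a tool that scores well on simple scenarios should not win if it cannot handle a core feature of the application.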

I’ve learned that a great strategy combined with a bad choice of tooling can be another failure point of your automation project, and that a POC can save you from possible future failures.

6. Get others involved

Quality as a team effort

Context: Some years ago, I wrote my first test automation framework. I was very excited! Even if I considered my code to be very good, there were some parts that I thought needed some improvement. So, I asked my development colleagues for feedback, and I was amazed at the number of useful tips and tricks and code best practices I received! It really helped me in delivering better and faster code.

I’ve learned that input from the development team is really useful for the complex or technical parts of the code, and that input from testers helps to achieve better functional test coverage. Then there is the product owner, who gives useful information on functionality, DevOps, who help with CI integration, and managers, whose input helps to keep the focus on the return on investment.

I’ve learned that to maximize the delivered value and to better support the team, I need to involve others in the test automation journey and treat quality as a team effort.

7. Don’t automate everything

“You can win the battle, but you’ll end up losing the war.”

Context: We wanted to automate as much as possible to minimize manual regression. We managed to automate around 80-90% of the manually defined test scenarios for one functionality, and this ran well, at least at the beginning. After a while, we noticed that we still did not have proper overall coverage, and the time needed to maintain the tests was growing because of the amount of detail we were adding. Also, when a bad piece of code was checked in, several tests failed because of it, and this happened regularly. We had to investigate many tests to work out whether they pointed to different defects. A single defect was manifesting itself in multiple tests, adding a lot of noise to each execution.

I’ve learned that we do not need to automate everything. We need to keep our focus on the critical path, on what has a high business impact, and on tests that are repetitive or subject to human error. If I want to obtain good test coverage at the application level, I’ve learned that I need to prioritize my automation backlog and decide what really needs to be automated. I need to balance my decision based on 3 key points: functionality risk/priority from a business perspective, how much effort is required to automate the test, and how much effort is needed to run the test manually.
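The 3 key points above can be combined into a rough priority score for the automation backlog. The formula in this sketch is purely an assumption made for illustration (business risk multiplied by manual effort, divided by automation effort), and the scenario names, figures, and threshold are hypothetical; the point is only to show how the three factors can be weighed against each other.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A test scenario considered for automation (all values hypothetical)."""
    name: str
    business_risk: int        # 1 (low) to 5 (critical path / high business impact)
    manual_hours: float       # effort to run the test manually, per regression cycle
    automation_hours: float   # effort required to automate the test


def priority(candidate):
    """Illustrative score: higher risk and higher manual effort raise the priority,
    higher automation effort lowers it."""
    return candidate.business_risk * candidate.manual_hours / candidate.automation_hours


def prioritize(backlog, threshold):
    """Return the candidates worth automating, highest priority first."""
    selected = [c for c in backlog if priority(c) >= threshold]
    return sorted(selected, key=priority, reverse=True)


if __name__ == "__main__":
    backlog = [
        Candidate("checkout payment", business_risk=5, manual_hours=2.0, automation_hours=8.0),
        Candidate("login", business_risk=4, manual_hours=1.0, automation_hours=2.0),
        Candidate("report export", business_risk=3, manual_hours=3.0, automation_hours=12.0),
        Candidate("profile avatar upload", business_risk=1, manual_hours=0.5, automation_hours=6.0),
    ]
    for candidate in prioritize(backlog, threshold=1.0):
        print(candidate.name, priority(candidate))
```

Scenarios that fall below the threshold are not ignored; they simply stay in manual or exploratory testing until their risk or cost changes.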

I’ve learned that too many automated tests add too much noise, and that we need to prioritize coverage over the number of tests because “we can win a battle in the short term but will lose the war in the end.”

These are just a few of the lessons I’ve learned throughout my test automation journey. Part two of my 13 lessons learned will follow next month. In the meantime, please let me know your own lessons learned. I am looking forward to reading your stories!

Codruta Bunea

Test Lead and Expert

codruta.bunea @ tss-yonder .com
